👋 Hey friends,

Here’s what I’ve got for you this week:

Another snippet from Chapter 3 of The Backend Lowdown

Creator update: Rolling out to YouTube, RSS feed

War Story: Concurrent index creation

Let’s get into it 👇

The Backend Lowdown: Chapter 3 Preview

Every newsletter will include a snippet from my book in progress, The Backend Lowdown, available for $1 right now on Gumroad!

Side note: Chapter 3 was just released! Go check it out!

Ghost Queries from Plugins/Serializers

Plugins, serializers, or model callbacks can quietly issue extra SQL queries behind the scenes, often outside the main application code, leading to unexpected performance regressions. These hidden "ghost queries" can go unnoticed during development but wreak havoc under load.

How serializers or callbacks sneak in extra queries

Many frameworks and ORMs allow plugins or serializers to define callbacks that fire during object hydration or rendering and in Rails/ActiveRecord, these can issue hidden SQL per record. Common ActiveRecord patterns that introduce ghost queries include:

Association touch-ups in serializers

class UserSerializer < ActiveModel::Serializer

attributes :id, :name, :email

has_one :profile

def profile

object.profile # issues a query per user if not eager-loaded

end

endRendering a collection with User.all will fire one SELECT for users, then one SELECT per user for profiles unless you eager-load.

Computed attributes with callbacks or methods

class Order < ApplicationRecord

after_find :load_discount_name

def load_discount_name

self.discount_name = Discount.find(discount_id).name

end

endFetching orders (e.g., Order.where(status: 'pending')) triggers an extra query per order to look up its discount name.

Callback chains in models

class Product < ApplicationRecord

after_initialize :track_initial_load

def track_initial_load

AnalyticsEvent.create!(product_id: id, event: 'initialized')

end

endEvery instantiation or fetch of Product records fires AnalyticsEvent.create!, causing unexpected writes at render time.

These patterns often slip past notice in development but multiply under load, leading to N+1-style bloat hidden within serializers or model callbacks.

Creator Update: YouTube and RSS Feed

Just some small creator news: I launched a YouTube channel! For now I’ll be sticking to posting YouTube Shorts, but may roll out some longer form videos down the line. If you’d rather watch my stuff over there, please feel free to “like and subscribe”!

Even smaller news: Someone reached out asking for an RSS feed of the newsletter and luckily Beehiiv has that capability baked in so if you also prefer to get your newsletter that way here’s the RSS link.

War Story: Concurrent Index Creation

(names, dates, and schemas changed to protect the innocent)

An engineer merges a “harmless” migration:

CREATE INDEX CONCURRENTLY IF NOT EXISTS idx_client_topic_channel_updated_at

ON user_topic_subscriptions (

client_id,

topic,

channel,

updated_at DESC

);The goal? Turbo-charge a query inside a background job. Five minutes later the APM lights up: database requests start timing out and an incident war room spins up.

“Index creation was indeed related. Table traffic + size caused a full lock during finalization.”

What actually happened?

Two long scans & a lot of waiting -

CREATE INDEX CONCURRENTLYruns three transactions:insert an invalid placeholder index

scan table twice

then mark the index valid.

Before each phase it must wait for every open transaction on that table to finish.

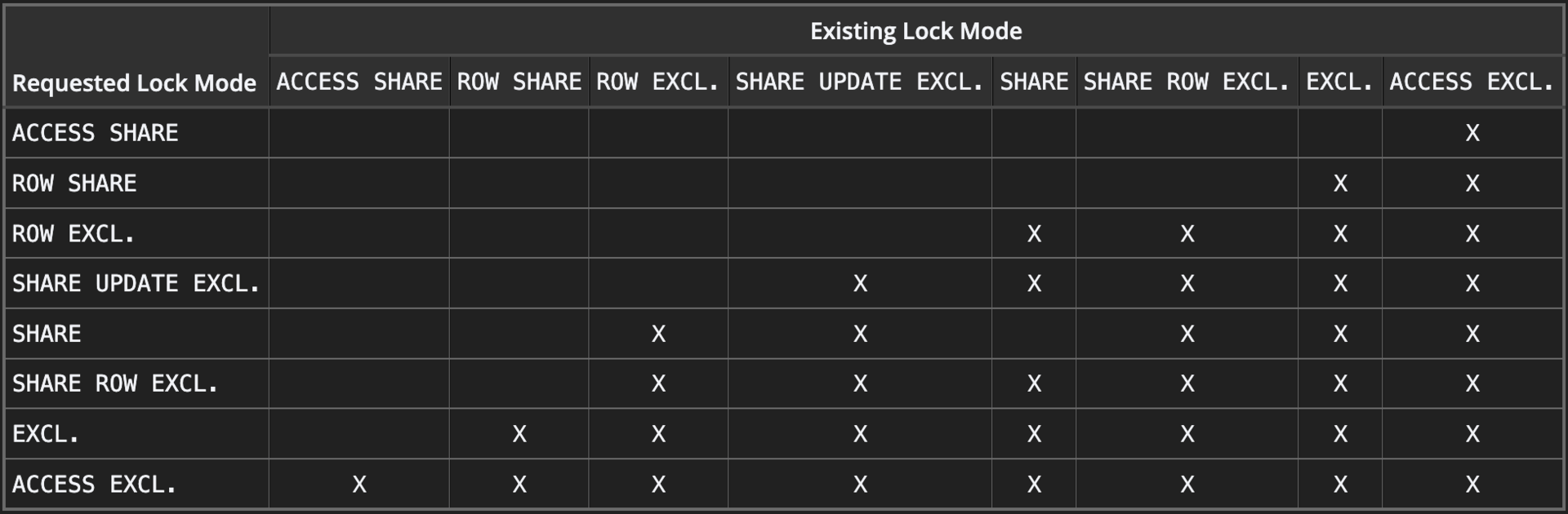

Lock upgrade at the finish line - During the “make it valid” step Postgres grabs a

ShareUpdateExclusiveLockon the table. That lock blocks anyINSERT/UPDATE/DELETE(it conflicts with ordinaryRowExclusiveLocks).

Postgres Conflicting Lock Modes

Busy table + long-running transactions = traffic jam:

The affected table receives a steady stream of writes.

A single analytics transaction that started before the index build kept running.

The index builder had to wait for that xact → it sat first in the lock queue.

All new writers stacked up behind it, exhausting the connection pool.

App threads timed-out, alarms were triggered

How it was fixed

Canceled the migration (

pg_cancel_backend), restoring write flow in seconds.Re-ran the index after hours with

SET lock_timeout = '5s'so it would bail instead of block if it couldn’t grab its finishing lock.

Take-aways

“Concurrent” doesn’t mean “lock-free.” It avoids a long

ACCESS EXCLUSIVElock, but it still needs a brief table-level lock that writers will feel.Always check for long-running queries before building big indexes, use

lock_timeout, and schedule migrations when traffic is low. Otherwise your “performance” fix might be the outage story in someone else’s newsletter next week.

That’s a wrap for this week. If something here made your day smoother, feel free to reply and tell me about it. And if you think a friend or teammate would enjoy this too, I’d be grateful if you shared it with them.

Until next time,

Steven